Thanks for the link.

I have installed the runtime, rebooted the PC, and put llama64.dll and phi-2_Q4_K_M.gguf into the same folder as the EXE, but I still have no luck: I get an error loading llama64.dll/phi-2_Q4_K_M.gguf ...

Which FWH version is needed for that sample?
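One thing worth checking, assuming the error concerns the model file rather than the DLL itself: the FWH sample in this thread passes the bare name "phi-2_Q4_K_M.gguf" to Llama(). A relative name like that is resolved against the process's current working directory, which is not necessarily the EXE folder (it can differ when the program is started from a shortcut or an IDE). The minimal sketch below builds the path from the EXE location instead. NextToExe and SampleFilesPresent are hypothetical helpers, not part of FWH or llama.cpp; on Windows the EXE path itself would come from GetModuleFileNameW() rather than from a literal.

```cpp
#include <filesystem>
#include <string>

namespace fs = std::filesystem;

// Hypothetical helper: builds the path of a file that sits in the same
// folder as the executable, e.g. the model "phi-2_Q4_K_M.gguf".
fs::path NextToExe(const fs::path& exePath, const std::string& fileName) {
    return exePath.parent_path() / fileName;
}

// Hypothetical check: true only if both files the sample needs are present
// next to the executable. fs::exists() returns false for missing paths.
bool SampleFilesPresent(const fs::path& exePath) {
    return fs::exists(NextToExe(exePath, "llama64.dll")) &&
           fs::exists(NextToExe(exePath, "phi-2_Q4_K_M.gguf"));
}
```

Passing the resulting full path to Llama() instead of the bare file name removes the dependency on the working directory.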

Code:

// llama.cpp example entry point: MODEL_PATH is required, PROMPT is optional
int main(int argc, char ** argv) {
    gpt_params params;

    if (argc == 1 || argv[1][0] == '-') {
        printf("usage: %s MODEL_PATH [PROMPT]\n" , argv[0]);
        return 1 ;
    }

    if (argc >= 2) {
        params.model = argv[1];
    }

    if (argc >= 3) {
        params.prompt = argv[2];
    }

    if (params.prompt.empty()) {
        params.prompt = "Hello my name is";
    }

    // total length of the sequence including the prompt
    const int n_len = 32;

Code:

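// callback type: receives one generated token as a C string
typedef void (*PFUNC) (char* szToken);

// exported replacement for main(): model path and prompt come from the caller,
// and each generated token is handed to pCallBack
extern "C" __declspec (dllexport) int Llama(char* szModel, char* szPrompt, PFUNC pCallBack) {
    gpt_params params;

    params.model = szModel;
    params.prompt = szPrompt;
    params.sparams.temp = 0.7;   // sampling temperature

    // total length of the sequence including the prompt
    const int n_len = 512;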
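The exported Llama() receives a PFUNC callback, and in the patched generation loop (the pCallBack line in this thread) each token goes to that callback instead of being printed. A minimal sketch of that contract, assuming a stand-in loop in place of the real llama.cpp calls: LlamaSketch and its fixed token list are hypothetical, not part of llama.cpp or FWH. It also shows what the n_len comment means: the limit counts the prompt tokens, so at most n_len - n_prompt tokens are generated.

```cpp
#include <cassert>
#include <string>

// Same callback type as the DLL code above: receives one generated token.
typedef void (*PFUNC)(char* szToken);

// Hypothetical stand-in for the patched generation loop: it replays a fixed
// token list instead of sampling a model. As with n_len in the original,
// n_len is the total sequence length including the n_prompt prompt tokens,
// so at most n_len - n_prompt tokens reach the callback.
// (__declspec(dllexport) is omitted so the sketch also builds outside Windows.)
extern "C" int LlamaSketch(int n_prompt, int n_len, PFUNC pCallBack) {
    assert(pCallBack != nullptr);
    static const char* const kFakeTokens[] = {" Hello", ",", " world", "!"};
    const int n_fake = static_cast<int>(sizeof(kFakeTokens) / sizeof(kFakeTokens[0]));

    int n_sent = 0;
    for (int n_cur = n_prompt; n_cur < n_len && n_sent < n_fake; ++n_cur) {
        // Plays the role of llama_token_to_piece(): a short-lived std::string.
        std::string piece = kFakeTokens[n_sent];
        // The pointer is only valid during this call; the host must copy it.
        pCallBack(&piece[0]);
        ++n_sent;
    }
    return n_sent;  // number of tokens passed to the callback
}
```

A host keeps the text by copying each piece as it arrives, the way the FWH sample in this thread appends it with oAnswer:Append(). It must not keep the char* itself: in the real pCallBack line it points into a temporary std::string that is destroyed as soon as the call returns.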

Code:

// LOG_TEE("%s", llama_token_to_piece(ctx, new_token_id).c_str());

Code:

// send each generated token to the caller's callback instead of printing it
pCallBack((char*)llama_token_to_piece(ctx, new_token_id).c_str());

I have downloaded the latest FWH_tools-master.zip and am using the new (bigger) llama64.dll, but I still get the error message.

Antonio Linares wrote: Solved,

please download this llama64.dll:

https://github.com/FiveTechSoft/FWH_too ... lama64.dll

Thanks for the answer.

alerchster wrote: With the downloaded DLL I only ever got error 0 in the program.

Then I built llama64.dll myself according to the information above, and it worked immediately.

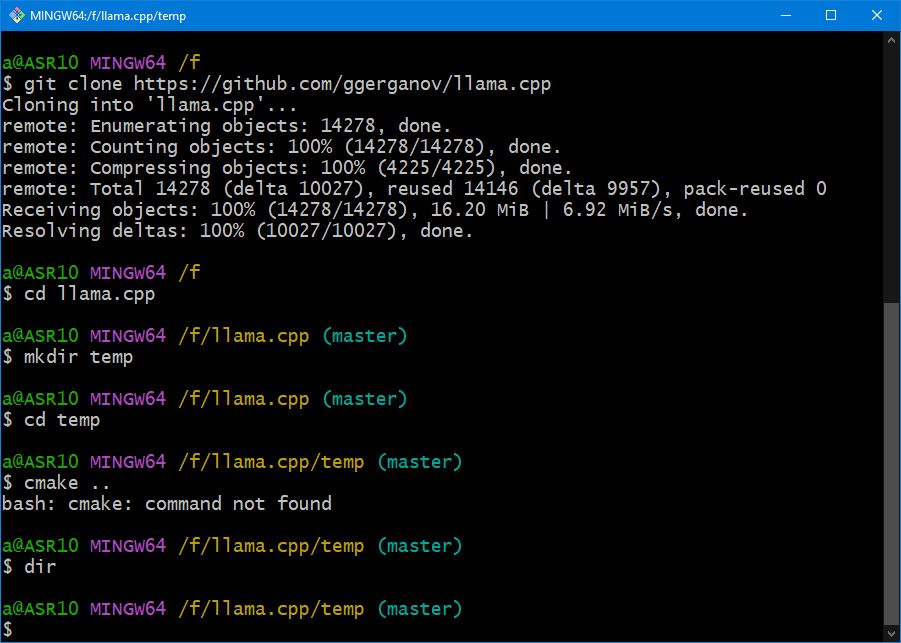

Dear Jimmy, you have to download and install cmake:

Jimmy wrote: Hi,

I need help creating llama64.dll.

I have downloaded and installed "Git for Windows".

After setup, a small Bash console window opens,

but I got stuck at "5. cmake ..".

Please advise me what to do.

Code:

#include "Fivewin.ch"

function Main()

   local oDlg, cPrompt := PadR( "List 10 possible uses of AI from my Windows apps.", 200 )
   local cAnswer := "", oAnswer, oBtn

   DEFINE DIALOG oDlg SIZE 700, 500 TITLE "FWH AI"

   @ 1, 1 GET cPrompt SIZE 300, 15

   @ 3, 1 GET oAnswer VAR cAnswer MULTILINE SIZE 300, 200

   @ 0.7, 52.5 BUTTON oBtn PROMPT "start" ;
      ACTION ( oBtn:Disable(), Llama( "phi-2_Q4_K_M.gguf", RTrim( cPrompt ),;
               CallBack( { | cStr | oAnswer:SetFocus(), oAnswer:Append( cStr ) } ) ),;
               oBtn:Enable(), oBtn:SetFocus() )

   @ 2.2, 52.5 BUTTON "Clear" ACTION oAnswer:SetText( "" )

   ACTIVATE DIALOG oDlg CENTERED

return nil

DLL FUNCTION Llama( cModel AS LPSTR, cPrompt AS LPSTR, pFunc AS PTR ) AS VOID PASCAL LIB "llama64.dll"

Thanks for the answer.

alerchster wrote: cmake is part of VS!

At the latest after step 4, run "%ProgramFiles%\Microsoft Visual Studio\2022\Community\VC\Auxiliary\Build\vcvarsall.bat" amd64 (the exact path depends on the VS version); otherwise "cmake .." will not work.

I'm not sure about "CallBack" under HMG.

Antonio Linares wrote: Could you kindly adapt this code to HMG?
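The CallBack( { | cStr | ... } ) call in the FWH sample has to turn a Harbour codeblock into a plain C function pointer, because a PFUNC parameter cannot accept anything else. I don't know how FWH implements CallBack(), and whether HMG offers an equivalent is not answered in this thread. As a hedged illustration of the general technique (a "trampoline"), here is a C++ sketch; MakeTokenCallback, TokenTrampoline and g_tokenHandler are hypothetical names.

```cpp
#include <functional>
#include <string>
#include <utility>

// Same signature the DLL expects for its token callback.
typedef void (*PFUNC)(char* szToken);

// Hypothetical host-side bridge. A raw C function pointer carries no user
// data, so the closure that should receive the tokens is kept in a static
// and a plain function forwards each token to it.
static std::function<void(const std::string&)> g_tokenHandler;

static void TokenTrampoline(char* szToken) {
    if (g_tokenHandler && szToken != nullptr) {
        // Copy the token: the DLL's buffer is only valid during this call.
        g_tokenHandler(std::string(szToken));
    }
}

// Installs the handler and returns the pointer to pass as pFunc to Llama().
// Only one handler can be active at a time, since it lives in one static.
PFUNC MakeTokenCallback(std::function<void(const std::string&)> handler) {
    g_tokenHandler = std::move(handler);
    return &TokenTrampoline;
}
```

Any host, HMG included, needs the same two ingredients: a native function with the void (char*) signature, and somewhere to keep the state that each token is appended to.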