
Re: Artificial intelligence - Class TPerceptron

Posted: Fri May 19, 2017 8:04 am

by Enrico Maria Giordano

rhlawek wrote: I've been looking for some old source code to prove it to myself but this looks very similar to what I was taught as Predictor/Corrector methods back in the mid-80s

Yes, it's a very old concept. But still interesting.

EMG

Re: Artificial intelligence - Class TPerceptron

Posted: Fri May 19, 2017 10:35 am

by Antonio Linares

Pedro Domingos calls them "learners": software that can "learn" from data.

The simplest way of learning from data is comparing two bytes. How? By subtracting them: a result of zero means they are equal, and any other result means they are different.

The difference between them is the "error". To correct the error, we modify a "weight". It's amazing how much can be built from that simple concept, in the same way that all our software technology comes from a bit being zero or one.

The perceptron mimics (in a very simple way) the behavior of a brain neuron. The neuron receives several inputs, each with its own weight (stored in the neuron), and the sum of all those inputs times their weights determines whether or not the neuron fires an output.
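
As a rough illustration of the weighted sum described above, here is a minimal sketch in Python (used here instead of Harbour only so it can be run anywhere). The function name `perceptron_output`, the threshold and the weight values are all assumptions made up for this example.

```python
def perceptron_output(inputs, weights, threshold=0.0):
    """Return 1 (fire) if the weighted sum of the inputs exceeds the threshold, else 0."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


# Hypothetical weights and threshold, chosen only for illustration.
weights = [0.6, 0.6]
fires = perceptron_output([1, 1], weights, threshold=1.0)   # weighted sum 1.2 is above 1.0
silent = perceptron_output([1, 0], weights, threshold=1.0)  # weighted sum 0.6 is not
print(fires, silent)
```

With these particular values the neuron only fires when both inputs are active, which is one way a single neuron can behave like a logical AND.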

Backpropagation helps to fine-tune those weights until the perceptron has "adjusted" itself to the right weight for each input and produces the expected output.
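
To make the weight adjustment concrete, here is a small Python sketch. Note that it uses the classic perceptron learning rule, not full backpropagation: a single neuron has no hidden layers to propagate the error through, so the error is applied directly to each weight. The names `predict` and `train`, the learning rate, the bias term and the AND training data are assumptions for this example, not part of the TPerceptron class.

```python
def predict(inputs, weights, bias):
    """Fire (1) when the bias plus the weighted sum of the inputs is positive."""
    total = bias + sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > 0 else 0


def train(samples, epochs=20, rate=0.1):
    """Perceptron learning rule: move each weight by rate * error * input."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, expected in samples:
            error = expected - predict(inputs, weights, bias)
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias


# Training data for a logical AND (hypothetical example task).
AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias = train(AND_SAMPLES)
results = [predict(inputs, weights, bias) for inputs, _ in AND_SAMPLES]
print(results)
```

Each update is just the "error" (expected minus actual) scaled by a small rate, so the weights drift towards values that reproduce the expected outputs.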

AI is already everywhere and will greatly change our lives and the way software is developed.

Re: Artificial intelligence - Class TPerceptron

Posted: Tue May 23, 2017 8:49 am

by Antonio Linares

Re: Artificial intelligence - Class TPerceptron

Posted: Tue May 23, 2017 9:33 am

by Antonio Linares

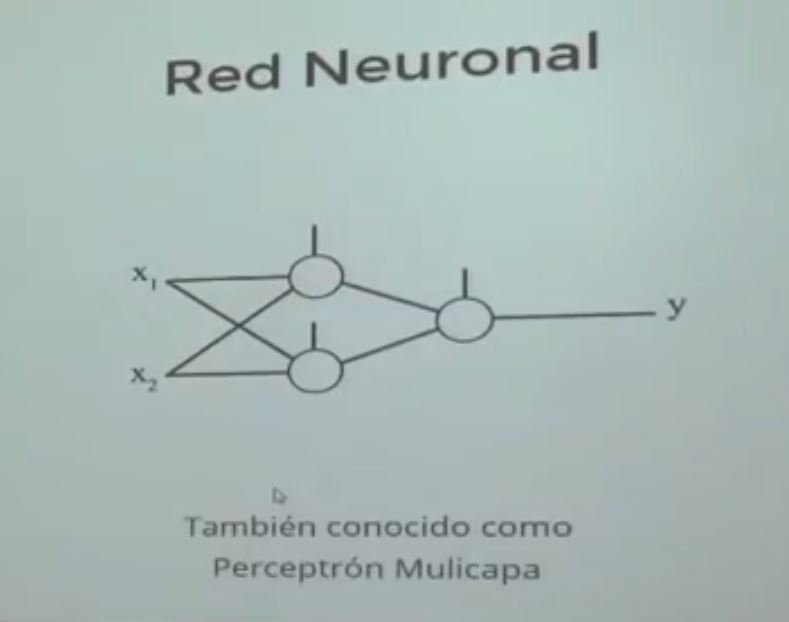

Multilayer perceptron

Re: Artificial intelligence - Class TPerceptron

Posted: Fri May 26, 2017 6:37 pm

by Antonio Linares

Re: Artificial intelligence - Class TPerceptron

Posted: Fri May 26, 2017 8:05 pm

by Antonio Linares

David Miller C++ code ported to Harbour:

viewtopic.php?p=202115#p202115

Don't miss the chance to try your first neural network.

Re: Artificial intelligence - Class TPerceptron

Posted: Thu Jun 01, 2017 4:12 pm

by Antonio Linares

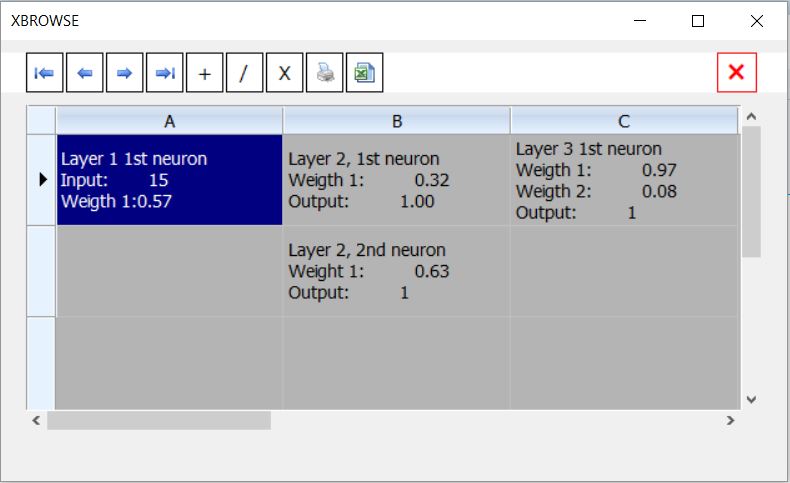

Inspecting the neural network:


#include "FiveWin.ch"

// TNet is assumed to be the class from the Harbour port linked earlier in this thread

function Main()

   local oNet := TNet():New( { 1, 2, 1 } )  // topology: 1 input, 2 hidden and 1 output neuron
   local x

   // Train on random inputs: the target is 5 for multiples of 5, otherwise 1
   while oNet:nRecentAverageError < 0.95
      oNet:FeedForward( { x := nRandom( 1000 ) } )
      oNet:Backprop( { If( x % 5 == 0, 5, 1 ) } )
   end

   // Feed one value through the net, then browse the weights and output of every neuron
   oNet:FeedForward( { 15 } )

   XBROWSER ArrTranspose( { "Layer 1, 1st neuron" + CRLF + "Input:" + Str( oNet:aLayers[ 1 ][ 1 ]:nOutput ) + ;
                            CRLF + "Weight 1:" + Str( oNet:aLayers[ 1 ][ 1 ]:aWeights[ 1 ], 4, 2 ), ;
                            { "Layer 2, 1st neuron" + CRLF + "Weight 1: " + Str( oNet:aLayers[ 2 ][ 1 ]:aWeights[ 1 ] ) + ;
                              CRLF + "Output: " + Str( oNet:aLayers[ 2 ][ 1 ]:nOutput ),;
                              "Layer 2, 2nd neuron" + CRLF + "Weight 1: " + Str( oNet:aLayers[ 2 ][ 2 ]:aWeights[ 1 ] ) + ;
                              CRLF + "Output: " + Str( oNet:aLayers[ 2 ][ 2 ]:nOutput ) },;
                            "Layer 3, 1st neuron" + CRLF + "Weight 1: " + Str( oNet:aLayers[ 3 ][ 1 ]:aWeights[ 1 ] ) + ;
                            CRLF + "Weight 2: " + Str( oNet:aLayers[ 3 ][ 1 ]:aWeights[ 2 ] ) + ;
                            CRLF + "Output: " + Str( oNet:aLayers[ 3 ][ 1 ]:nOutput ) } ) ;
      SETUP ( oBrw:nDataLines := 4,;
              oBrw:aCols[ 1 ]:nWidth := 180,;
              oBrw:aCols[ 2 ]:nWidth := 180,;
              oBrw:aCols[ 3 ]:nWidth := 180,;
              oBrw:nMarqueeStyle := 3 )

return nil
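
For readers who want to see what such an inspection looks at, here is a rough Python sketch of one forward pass through a 1-2-1 network that returns the output of every neuron. It is not the TNet implementation: the `feed_forward` function, the tanh activation, the absence of bias neurons and the weight values are all assumptions made for this example.

```python
import math


def feed_forward(value, hidden_weights, output_weights):
    """Propagate one input through a 1-2-1 network and return each layer's outputs."""
    input_out = value  # the single input neuron simply passes its value on
    hidden_out = [math.tanh(input_out * w) for w in hidden_weights]
    output_out = math.tanh(sum(h * w for h, w in zip(hidden_out, output_weights)))
    return {"layer1": [input_out], "layer2": hidden_out, "layer3": [output_out]}


# Hypothetical weights, not taken from a trained network.
snapshot = feed_forward(0.5, hidden_weights=[0.8, -0.4], output_weights=[1.0, 0.5])
for layer, outputs in snapshot.items():
    print(layer, [round(o, 4) for o in outputs])
```

Printing one line per layer gives the same kind of overview as the browse above: what went in, what each hidden neuron produced, and what came out.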

Re: Artificial intelligence - Class TPerceptron

Posted: Sat Jun 24, 2017 5:51 am

by Antonio Linares

Teaching a perceptron to multiply a number by 2:

#include "FiveWin.ch"

function Main()

   local oNeuron := TPerceptron():New( 1 )
   local n, nValue

   // Train with 50 random samples; the expected result is always twice the input
   for n = 1 to 50
      oNeuron:Learn( { nValue := nRandom( 1000 ) }, ExpectedResult( nValue ) )
   next

   MsgInfo( oNeuron:aWeights[ 1 ] )        // learned weight, should end up close to 2
   MsgInfo( oNeuron:Calculate( { 5 } ) )   // should end up close to 10

return nil

function ExpectedResult( nValue )

return nValue * 2

CLASS TPerceptron

   DATA aWeights   // one weight per input

   METHOD New( nInputs )
   METHOD Learn( aInputs, nExpectedResult )
   METHOD Calculate( aInputs )

ENDCLASS

METHOD New( nInputs ) CLASS TPerceptron

   local n

   // Start with every weight at zero
   ::aWeights = Array( nInputs )

   for n = 1 to nInputs
      ::aWeights[ n ] = 0
   next

return Self

METHOD Learn( aInputs, nExpectedResult ) CLASS TPerceptron

   local nSum := ::Calculate( aInputs )

   // Nudge the weight by a fixed step towards the expected result.
   // Only aWeights[ 1 ] is adjusted, which is enough for this single-input example.
   if nSum < nExpectedResult
      ::aWeights[ 1 ] += 0.1
   elseif nSum > nExpectedResult
      ::aWeights[ 1 ] -= 0.1
   endif

return nil

METHOD Calculate( aInputs ) CLASS TPerceptron

   local n, nSum := 0

   // Weighted sum of all the inputs
   for n = 1 to Len( aInputs )
      nSum += aInputs[ n ] * ::aWeights[ n ]
   next

return nSum
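
For anyone without a Harbour toolchain at hand, the same idea can be sketched in Python. This is a loose rendering of the class above, not a line-by-line port: the `Perceptron` class name, the `step` parameter, the fixed random seed and `random.randint( 1, 1000 )` standing in for `nRandom( 1000 )` are assumptions for this example.

```python
import random


class Perceptron:
    def __init__(self, n_inputs):
        self.weights = [0.0] * n_inputs  # every weight starts at zero

    def calculate(self, inputs):
        """Weighted sum of the inputs."""
        return sum(x * w for x, w in zip(inputs, self.weights))

    def learn(self, inputs, expected, step=0.1):
        """Move the first weight one fixed step towards the expected result."""
        result = self.calculate(inputs)
        if result < expected:
            self.weights[0] += step
        elif result > expected:
            self.weights[0] -= step


random.seed(0)  # fixed seed only so that repeated runs behave the same
neuron = Perceptron(1)
for _ in range(50):
    value = random.randint(1, 1000)
    neuron.learn([value], value * 2)

print(neuron.weights[0], neuron.calculate([5]))
```

Because the step is a fixed 0.1, the weight climbs to roughly 2 and then stays within one step of it; floating-point rounding means it may not land on exactly 2.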

Re: Artificial intelligence - Class TPerceptron

Posted: Wed Jun 28, 2017 4:07 pm

by Silvio.Falconi

Re: Artificial intelligence - Class TPerceptron

Posted: Mon Jul 17, 2017 3:47 am

by Antonio Linares

Re: Artificial intelligence - Class TPerceptron

Posted: Fri Jul 21, 2017 3:55 am

by Antonio Linares

Re: Artificial intelligence - Class TPerceptron

Posted: Sun Jul 23, 2017 9:11 am

by Antonio Linares

Scaled value: ( Input Value - Minimum ) / ( Maximum - Minimum )

Descaled value (Input Value): ( Scaled value * ( Maximum - Minimum ) ) + Minimum

Re: Artificial intelligence - Class TPerceptron

Posted: Sun Jul 23, 2017 9:52 am

by Antonio Linares

Test of scaling and descaling values:

Scaling: ( value - minimum ) / ( Maximum - Minimum )

0 --> ( 0 - 0 ) / ( 9 - 0 ) --> 0

1 --> ( 1 - 0 ) / ( 9 - 0 ) --> 0.111

2 --> ( 2 - 0 ) / ( 9 - 0 ) --> 0.222

3 --> ( 3 - 0 ) / ( 9 - 0 ) --> 0.333

4 --> ( 4 - 0 ) / ( 9 - 0 ) --> 0.444

5 --> ( 5 - 0 ) / ( 9 - 0 ) --> 0.556

6 --> ( 6 - 0 ) / ( 9 - 0 ) --> 0.667

7 --> ( 7 - 0 ) / ( 9 - 0 ) --> 0.778

8 --> ( 8 - 0 ) / ( 9 - 0 ) --> 0.889

9 --> ( 9 - 0 ) / ( 9 - 0 ) --> 1
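
The scaling and descaling formulas from the posts above can be checked with a short Python sketch. The function names `scale` and `descale` are made up for this example; the range 0 to 9 matches the test above.

```python
def scale(value, minimum, maximum):
    """Map a value from [minimum, maximum] to [0, 1]."""
    return (value - minimum) / (maximum - minimum)


def descale(scaled, minimum, maximum):
    """Map a scaled value from [0, 1] back to [minimum, maximum]."""
    return scaled * (maximum - minimum) + minimum


# Round trip for the digits 0 to 9: value, scaled value, restored value.
for value in range(10):
    scaled = scale(value, 0, 9)
    restored = descale(scaled, 0, 9)
    print(value, round(scaled, 3), round(restored, 3))
```

Descaling a scaled value should give back the original input, apart from tiny floating-point differences.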

Re: Artificial intelligence - Class TPerceptron

Posted: Fri Aug 04, 2017 5:35 am

by Antonio Linares

In TensorFlow we have the softmax function, which transforms the output of each unit to a value between 0 and 1 and makes the outputs of all units sum to 1. It tells us the probability of each category.

https://medium.com/@Synced/big-picture-machine-learning-classifying-text-with-neural-networks-and-tensorflow-da3358625601
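
To show what softmax computes, here is a plain Python sketch. It does not use the TensorFlow API; the function and the example scores are assumptions for illustration. Subtracting the largest score before exponentiating is a common trick to avoid overflow and does not change the result.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    shift = max(scores)  # subtracting the maximum keeps exp() from overflowing
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw outputs of three units, one per category.
probabilities = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probabilities], sum(probabilities))
```

The unit with the highest raw score gets the highest probability, so the most likely category can be read off directly.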

Re: Artificial intelligence - Class TPerceptron

Posted: Sat Sep 02, 2017 7:23 pm

by Carles

Hello!

An interesting article that helps you get into this field...

https://blogs.elconfidencial.com/tecnol ... n_1437007/

Cheers.