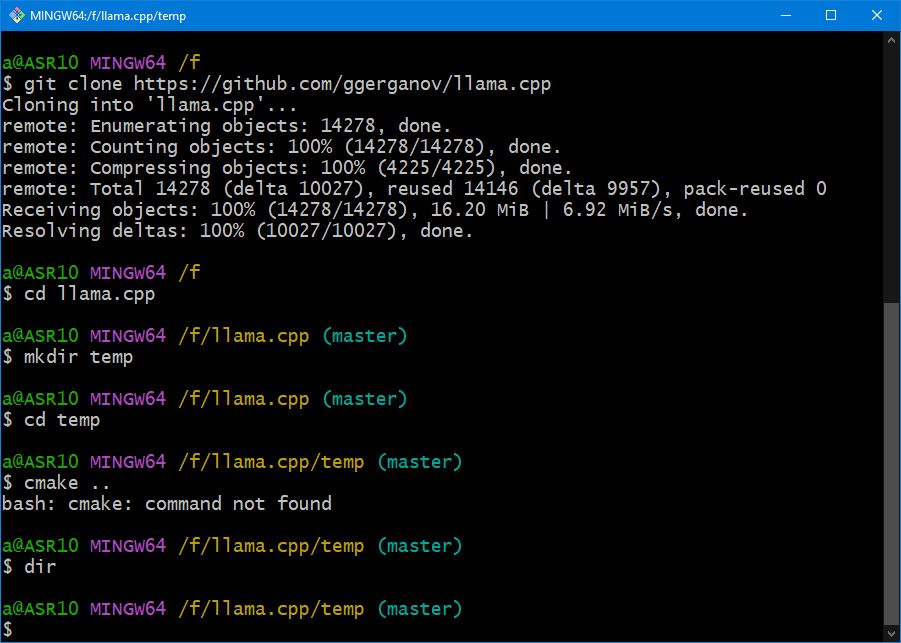

Steps to build llama64.dll:

1. git clone https://github.com/ggerganov/llama.cpp

2. cd llama.cpp

3. mkdir temp

4. cd temp

5. cmake ..

6. Open the generated llama.cpp.sln in Visual Studio and select "Release" as the configuration in the toolbar at the top.

7. On the project tree, right-click build_info, select Properties and, under C/C++ > Code Generation > Runtime Library, select "Multi-threaded (/MT)".

8. On the project tree, right-click common, select Properties and, under C/C++ > Code Generation > Runtime Library, select "Multi-threaded (/MT)".

9. On the project tree, right-click ggml, select Properties and, under C/C++ > Code Generation > Runtime Library, select "Multi-threaded (/MT)".

10. On the project tree, right-click llama, select Properties and, under C/C++ > Code Generation > Runtime Library, select "Multi-threaded (/MT)".

11. On the project tree, right-click simple, select Properties and, under C/C++ > Code Generation > Runtime Library, select "Multi-threaded (/MT)".

12. On the project tree, expand simple and open simple.cpp for editing.

13. Replace this code:


```cpp
int main(int argc, char ** argv) {
    gpt_params params;

    if (argc == 1 || argv[1][0] == '-') {
        printf("usage: %s MODEL_PATH [PROMPT]\n" , argv[0]);
        return 1 ;
    }

    if (argc >= 2) {
        params.model = argv[1];
    }

    if (argc >= 3) {
        params.prompt = argv[2];
    }

    if (params.prompt.empty()) {
        params.prompt = "Hello my name is";
    }

    // total length of the sequence including the prompt
    const int n_len = 32;
```

with this one:


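```cpp
// Callback type: receives each generated piece of text.
typedef void (*PFUNC) (char* szToken);

// Exported entry point that replaces main(); extern "C" keeps the exported name undecorated.
extern "C" __declspec (dllexport) int Llama(char* szModel, char* szPrompt, PFUNC pCallBack) {
    gpt_params params;

    params.model = szModel;
    params.prompt = szPrompt;
    params.sparams.temp = 0.7; // sampling temperature

    // total length of the sequence including the prompt
    const int n_len = 512;
```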

14. Replace:


```cpp
// LOG_TEE("%s", llama_token_to_piece(ctx, new_token_id).c_str());
```

with:


```cpp
pCallBack((char*)llama_token_to_piece(ctx, new_token_id).c_str());
```
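In step 14 the text handed to `pCallBack` is the buffer of the temporary `std::string` returned by `llama_token_to_piece`, so the pointer is only valid until the callback returns; a callback that wants to keep the text has to copy it. Below is a minimal sketch of a caller-side callback matching `PFUNC`. The names `g_output`, `CollectToken` and `EmitTokens` are hypothetical, and `EmitTokens` is only a stub standing in for the generation loop so the example builds without llama.cpp.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Same callback type as in the modified simple.cpp.
typedef void (*PFUNC)(char* szToken);

// Hypothetical caller-side buffer that accumulates the generated text.
static std::string g_output;

// Callback matching PFUNC. It copies the characters, because the pointer it
// receives refers to a temporary std::string destroyed after the call returns.
void CollectToken(char* szToken) {
    assert(szToken != nullptr);
    g_output += szToken;  // operator+= copies the characters into g_output
}

// Hypothetical stub for the generation loop inside Llama(): each piece is
// produced as a temporary std::string (as llama_token_to_piece returns one)
// and passed to the callback with the same cast used in step 14.
void EmitTokens(const std::vector<std::string>& pieces, PFUNC pCallBack) {
    for (const std::string& piece : pieces) {
        pCallBack((char*)std::string(piece).c_str());
    }
}
```

For example, `EmitTokens({"Hello", " my", " name", " is"}, CollectToken);` leaves `g_output` holding `"Hello my name is"`.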

15. On the project tree, right-click simple, select Properties and, under Configuration Properties > General > Configuration Type, select "Dynamic Library (.dll)".

16. On the project tree, right-click simple, select Properties and, under Configuration Properties > Advanced > Target File Extension, set ".dll" instead of ".exe".

17. On the project tree, right-click simple and select "Rebuild".

18. Rename simple.dll to llama64.dll.
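Once renamed, llama64.dll exports `Llama`, which any program able to load a DLL and pass a C function pointer can call. Below is a minimal C++ sketch of such a host, assuming a 64-bit build, where the `extern "C"` export keeps the plain name `Llama`. `RunLlama`, `LLAMA_FN` and the return codes -1/-2 are hypothetical. On non-Windows platforms the helper just reports that the DLL cannot be loaded.

```cpp
#include <cassert>
#ifdef _WIN32
#include <windows.h>
#endif

// Must match the callback type and exported signature in the modified simple.cpp.
typedef void (*PFUNC)(char* szToken);
typedef int (*LLAMA_FN)(char* szModel, char* szPrompt, PFUNC pCallBack);

// Hypothetical helper: loads the DLL, looks up the "Llama" export and runs it.
// Returns -1 if the DLL cannot be loaded, -2 if the export is missing,
// otherwise whatever Llama() itself returns.
int RunLlama(const char* dllPath, char* model, char* prompt, PFUNC cb) {
    assert(dllPath != nullptr);
#ifdef _WIN32
    HMODULE hDll = LoadLibraryA(dllPath);
    if (hDll == nullptr) {
        return -1;
    }
    // In a 64-bit build the extern "C" export is named exactly "Llama".
    LLAMA_FN pLlama = reinterpret_cast<LLAMA_FN>(GetProcAddress(hDll, "Llama"));
    if (pLlama == nullptr) {
        FreeLibrary(hDll);
        return -2;
    }
    int result = pLlama(model, prompt, cb);
    FreeLibrary(hDll);
    return result;
#else
    // llama64.dll is a Windows DLL, so there is nothing to load here.
    (void)model;
    (void)prompt;
    (void)cb;
    return -1;
#endif
}
```

Because `Llama` takes `char*` rather than `const char*`, the model path and prompt have to be passed as writable `char` arrays, not string literals. The sketch loads and frees the DLL on every call for simplicity. A long-running host would more likely keep the module handle for its whole lifetime.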